My experience with LLMs

Large language models (LLMs) have occupied my mind a lot this year. I have tried what feels like every option under the sun for hosting LLMs locally.

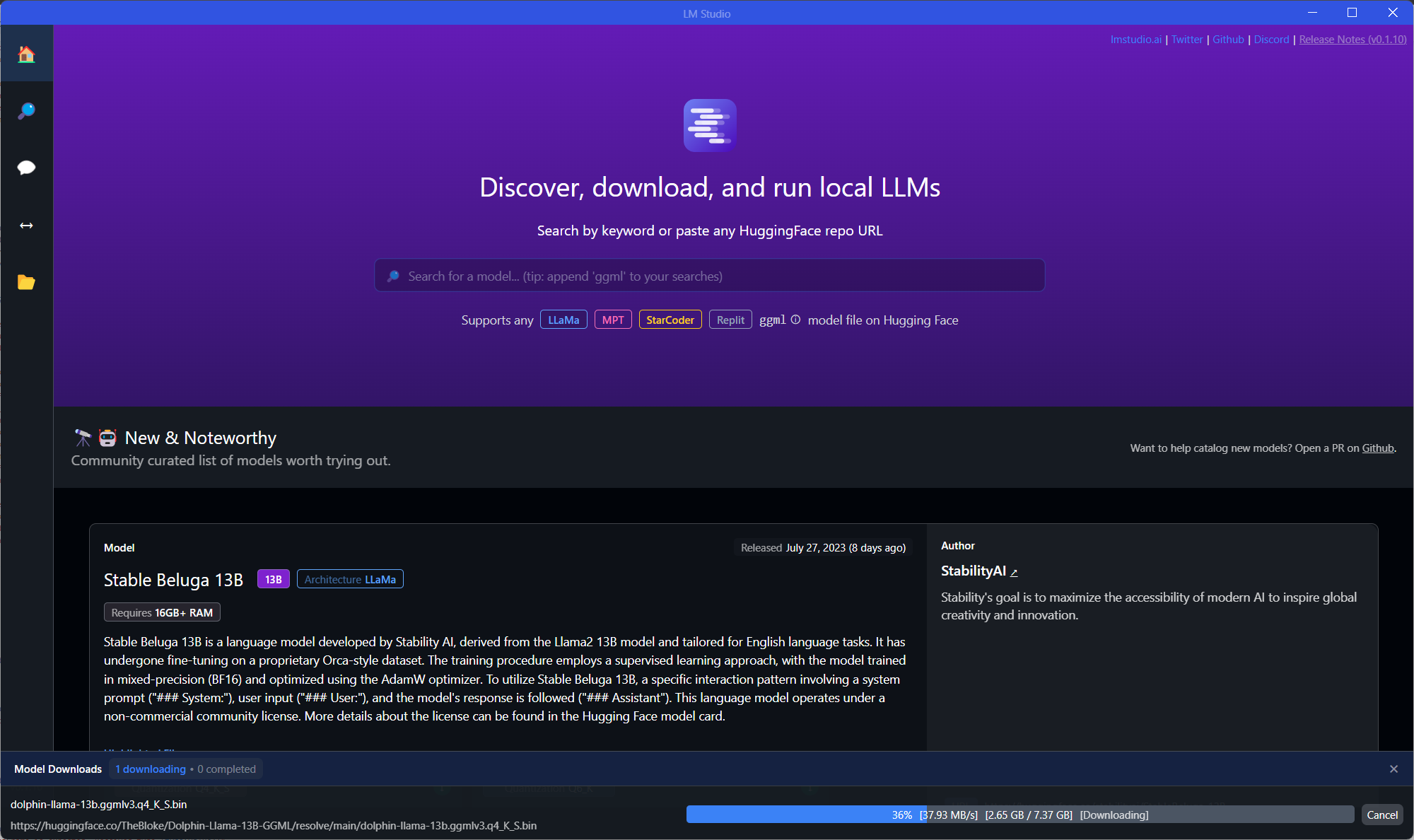

Recently, though, I feel like I am embracing the future, thanks to LMStudio: a welcome addition to the local LLM ecosystem that emphasizes local access, interoperability, and ease of use.

What's not to like?

Why local LLMs

Running a local LLM offers several compelling advantages for anyone seeking greater privacy, customization, and control over their AI tools.

Privacy: One of the primary reasons for hosting LLMs locally is the privacy it provides. By keeping data on your own device, you minimize the risk of sensitive information being exposed through a third party's data breach or hack. Your prompts and the generated output stay private, which matters given growing concerns about personal data security.

Customization: With a local LLM, you get a high degree of customization. Thousands of pre-built models are available, tailored to purposes ranging from creative writing to technical analysis and offered in various sizes to match your hardware. This flexibility lets you pick the model that best fits your requirements and preferences.

Control: The autonomy of running a local LLM is another key selling point. You have full control over low-level parameters such as sampling temperature and context length, so you can tune behavior and performance to your liking. You can also explore sandboxed functionality that cloud-based services may not expose, free of the restrictions a hosted provider might impose.

Taken together, this makes local hosting an attractive choice for those who value data security, want tailored experiences, and prefer direct control over their AI tools.

Tell me more. I am listening...

Recently, we have witnessed a string of advancements that have opened up LLMs to a wider audience. LMStudio has emerged in this landscape, further simplifying the process of hosting and using LLMs on your own device.

It runs on Linux, Windows, and macOS alike. I have personally only tried the Linux version, and it works. I have found it less troublesome than other offerings, which bodes well for a relatively recent entrant in this space.

Probably its key selling point is user-friendliness. Unlike the tools I tried at the beginning of the year (such as the venerable text-generation-webui and other open-source alternatives), LMStudio offers a streamlined experience that prioritizes ease of use.

Its intuitive interface allows non-technical users to set up and interact with their LLMs with little difficulty.

LMStudio also offers a curated collection of large language models from Hugging Face. Users can browse and download these pre-selected models with some assurance that they are compatible with the app and the host device.

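Another neat feature is an OpenAI-compatible REST endpoint. Because it follows the same request and response format as OpenAI's API, developers and power users can point existing scripts and tools at their local model instead of a cloud service.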
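To make the endpoint more concrete, here is a minimal Python sketch of a client that uses only the standard library. It assumes the local server has been started from within LMStudio on its default address, `http://localhost:1234/v1`, and that a model is already loaded. The model name `local-model` and the helper names `build_chat_request` and `chat` are hypothetical placeholders of my own; the request body follows the OpenAI chat-completions format.

```python
import json
import urllib.request

# Assumption: LMStudio's local server is running on its default address.
BASE_URL = "http://localhost:1234/v1"


def build_chat_request(prompt: str, model: str = "local-model",
                       temperature: float = 0.7) -> urllib.request.Request:
    """Build an OpenAI-style chat-completions request for the local server.

    `model` is a hypothetical placeholder; substitute the identifier of the
    model you have loaded in LMStudio.
    """
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": temperature,  # one of the low-level sampling knobs
    }
    return urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(prompt: str, **kwargs) -> str:
    """Send a prompt to the local model and return the text of its reply."""
    request = build_chat_request(prompt, **kwargs)
    with urllib.request.urlopen(request, timeout=120) as response:
        body = json.load(response)
    # OpenAI-compatible responses put the reply in choices[0].message.content
    return body["choices"][0]["message"]["content"]
```

With the server running, calling `chat("Explain what a local LLM is in one sentence.")` should return the model's reply as a string. Because the request shape mirrors OpenAI's, the official `openai` client library should also work if you set its `base_url` to the same local address.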

Wrapping up

LMStudio is carving out a place in the world of large language models, offering a one-stop solution for local hosting, a user-friendly interface, and robust integration capabilities.

As new offerings continue to push the boundaries of AI, LMStudio is a breath of fresh air, simplifying our journey towards more intelligent and interconnected applications.